Compress your Mesh

How to transparently compress response traffic between your microservices, consistently, using EnvoyFilters in Istio

If you're running any reasonably sized HA service on Public Clouds, you'll no doubt be paying the "zone tax". The cost varies a little across cloud providers but you're typically looking at $0.01/GB, which doesn't sound a lot - but if you're reading this it's likely you've seen how that adds up.

I've discussed one way to help reduce these costs in the past: using Locality Aware Routing to preferentially keep traffic within the same zone (which has other benefits, such as improved response times). However, it's not always feasible, or even right, to use that approach, so in this post I'm going to explore another option: trading a bit of CPU time to compress (response) data between microservices.

What it looks like

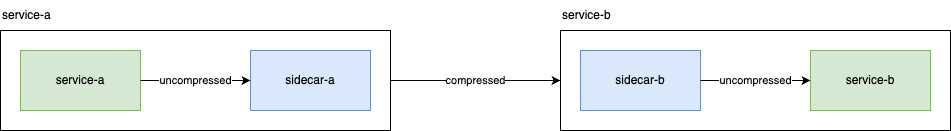

What we're going to do is configure the traffic between sidecar-a and sidecar-b to be compressed, completely transparently to service-a and service-b. The traffic between each service and its local sidecar will remain uncompressed: it's on the local network, and we achieve nothing (and spend CPU) by compressing that.

To do that, we're going to use Envoy Filters to inject a compressor filter on the response side of sidecar-b, to compress the response, and we're going to add a decompressor filter on the request side of sidecar-a to both request a compressed response and transparently decompress it. So let's look at those two bits of configuration:

Compressor Filter (response direction)

I've put the whole filter up in a gist here because it's reasonably large, but let's dig into a few bits of the configuration.

Firstly, I've decided to use Gzip (type.googleapis.com/envoy.extensions.compression.gzip.compressor.v3.Gzip). Envoy supports a few different compressors though, so have a read of the docs.

Next, min_content_length: 860. This specifies, in bytes, the minimum response size before bothering to compress. You'll need to experiment yourself, but there's a tail off in value when you compress small objects, and some very small objects can end up larger!

remove_accept_encoding_header, this stops the Accept-Encoding header being passed to your app. Remember what we said above about how there's little point in compressing data between the sidecar and your application as they're on localhost. Many common web frameworks will have gzip filters pre-wired so this ensures only plain text gets returned.

SIDECAR_INBOUND, this filter is being applied to the inbound listeners, and response_direction_config_enabled means it's being done on the response.

window_bits: 14, This is actually the default value now, however I prefer to be explicit rather than implicit, especially because:

The decompression window size needs to be equal or larger than the compression window size

I've actually found in testing that you need the compressor's window to be lower than, not equal to, the decompressor's; setting the compressor to 15 results in occasionally truncated data.

If you apply that EnvoyFilter to the istio-system namespace, you should find that any application you request gzip from (e.g. with curl -H 'accept-encoding: gzip' from a pod) will return gzip, provided the response size is > 860 bytes.
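For orientation, here is a minimal sketch of how the settings discussed above could fit together in a single EnvoyFilter. It is not a copy of the gist: the resource name, the INSERT_BEFORE placement ahead of the router filter, and the nesting of fields under response_direction_config are assumptions based on the Envoy v3 compressor API. Field placement has changed between Envoy versions, so check it against the version your Istio release ships.

```yaml
apiVersion: networking.istio.io/v1alpha3
kind: EnvoyFilter
metadata:
  name: gzip-compressor          # hypothetical name
  namespace: istio-system        # root namespace, so it applies to every sidecar
spec:
  configPatches:
    - applyTo: HTTP_FILTER
      match:
        context: SIDECAR_INBOUND # inbound listeners of the destination sidecar
        listener:
          filterChain:
            filter:
              name: envoy.filters.network.http_connection_manager
              subFilter:
                name: envoy.filters.http.router
      patch:
        operation: INSERT_BEFORE # assumption: place the compressor ahead of the router
        value:
          name: envoy.filters.http.compressor
          typed_config:
            "@type": type.googleapis.com/envoy.extensions.filters.http.compressor.v3.Compressor
            response_direction_config:
              common_config:
                enabled:
                  default_value: true
                  runtime_key: response_direction_config_enabled
                min_content_length: 860   # bytes; smaller responses are left alone
              # Strip Accept-Encoding before the request reaches the app,
              # so the app itself returns plain text.
              remove_accept_encoding_header: true
            compressor_library:
              name: gzip
              typed_config:
                "@type": type.googleapis.com/envoy.extensions.compression.gzip.compressor.v3.Gzip
                window_bits: 14           # kept below the decompressor's 15
```

Everything not set here (compression level, memory level, content types) is left at Envoy's defaults in this sketch; the gist may well set them differently.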

Decompressor Filter (request direction)

So we've got all our destination sidecars able to return gzip when we ask for it. The next thing we want to do is ensure that the source of the request does two things:

- Always requests gzip, by sending the Accept-encoding: gzip header
- Uncompresses it before passing it to the app (service-a in our example).

We do this using a Decompressor (type.googleapis.com/envoy.extensions.filters.http.decompressor.v3.Decompressor). The decompressor filter will add the accept-encoding: gzip header for you. Again I've popped this in a gist, and here are the key points:

window_bits: 15. As above, it's important these two numbers line up: the decompressor's window needs to be larger than the compressor's (14).

SIDECAR_OUTBOUND, we're applying this patch to the outbound requests.

Again, apply this to the istio-system namespace so it gets applied to all your Sidecars.
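As with the compressor, here is a minimal sketch of what the decompressor side could look like, rather than a copy of the gist. The resource name and the INSERT_BEFORE placement are assumptions, and advertise_accept_encoding is spelled out only for clarity (to my knowledge it already defaults to true in the Envoy v3 decompressor API).

```yaml
apiVersion: networking.istio.io/v1alpha3
kind: EnvoyFilter
metadata:
  name: gzip-decompressor         # hypothetical name
  namespace: istio-system         # root namespace, so it applies to every sidecar
spec:
  configPatches:
    - applyTo: HTTP_FILTER
      match:
        context: SIDECAR_OUTBOUND # outbound listeners of the calling sidecar
        listener:
          filterChain:
            filter:
              name: envoy.filters.network.http_connection_manager
              subFilter:
                name: envoy.filters.http.router
      patch:
        operation: INSERT_BEFORE  # assumption: place the decompressor ahead of the router
        value:
          name: envoy.filters.http.decompressor
          typed_config:
            "@type": type.googleapis.com/envoy.extensions.filters.http.decompressor.v3.Decompressor
            request_direction_config:
              # Append gzip to Accept-Encoding on outgoing requests.
              advertise_accept_encoding: true
            decompressor_library:
              name: gzip
              typed_config:
                "@type": type.googleapis.com/envoy.extensions.compression.gzip.decompressor.v3.Gzip
                window_bits: 15   # larger than the compressor's 14
```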

Now, if you repeat the test above (curl -H 'accept-encoding: gzip' on a pod), you should find you get back a plain text response. That's because the outbound sidecar has now handled decompression for you. You can validate this by looking at the istio proxy logs, or ksniffing the traffic.

The result

All response traffic from your services will be compressed if it's over 860 bytes, without you needing to touch your applications in any way. Hopefully, this should bring your bill down a bit too!

The other advantage in my eyes is consistency. We have python, nodejs, java, ruby, etc running on our platform. Some people have implemented compression handlers in their apps, others haven't. They all perform compression differently. This ensures that all data between our services is compressed consistently.

Like every decision though, there are consequences you should consider:

- You're trading network bandwidth for CPU time, and memory pressure on the Sidecar. In my testing, the difference was reasonably small and justifiable when considering the egress costs. Chances are some of your apps and services were already doing this, so you might see the CPU time shift from your app container to your sidecar.
- You might be compressing unnecessarily, for example if you have a microservice that is acting as a gateway, and simply streaming responses through, you've decompressed and compressed that hop without any reason to.
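If that second point bites, one possible mitigation, sketched here rather than tested, is to scope the compressor to labelled workloads instead of applying it mesh-wide from istio-system. workloadSelector is a standard EnvoyFilter field, but it only selects positively, so this becomes an opt-in model. The namespace, resource name and label below are all hypothetical.

```yaml
apiVersion: networking.istio.io/v1alpha3
kind: EnvoyFilter
metadata:
  name: gzip-compressor-opt-in   # hypothetical name
  namespace: my-team             # hypothetical: the namespace of the workloads, not istio-system
spec:
  workloadSelector:
    labels:
      mesh-compression: enabled  # hypothetical label; only pods carrying it get the filter
  configPatches:
    - applyTo: HTTP_FILTER
      match:
        context: SIDECAR_INBOUND
        listener:
          filterChain:
            filter:
              name: envoy.filters.network.http_connection_manager
              subFilter:
                name: envoy.filters.http.router
      patch:
        operation: INSERT_BEFORE
        value:
          name: envoy.filters.http.compressor
          typed_config:
            "@type": type.googleapis.com/envoy.extensions.filters.http.compressor.v3.Compressor
            response_direction_config:
              common_config:
                min_content_length: 860
              remove_accept_encoding_header: true
            compressor_library:
              name: gzip
              typed_config:
                "@type": type.googleapis.com/envoy.extensions.compression.gzip.compressor.v3.Gzip
                window_bits: 14
```

A pass-through gateway service would simply not carry the label, so its responses stay uncompressed on that hop. The cost is losing the "applies everywhere by default" property that makes the istio-system approach attractive.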