Upgrading Istio to 1.6

Upgrading to Istio 1.6 was quite painful. This post details all the issues we faced and how we tackled them, to hopefully save others some time.

Those of you who know me, or follow me on Twitter, know that the upgrade from 1.5 to 1.6 has been quite painful. It was further compounded by the fact that we opted to stay with the microservice deployment model for 1.4 -> 1.5 (as the changes to telemetry v2 were enough for us to tackle in a single release), so this latest upgrade also encompassed a mandatory move to istiod.

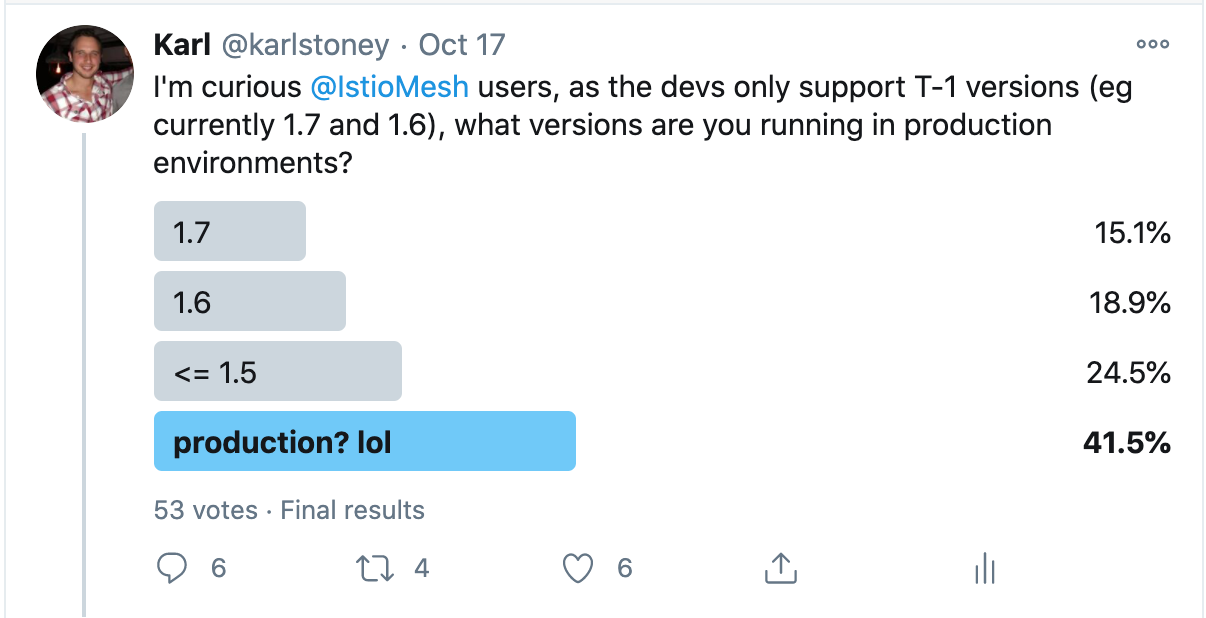

A recent poll I put out on Twitter shows that at least 25% of folks who responded are on <= 1.5:

So my friends, this post is for you. I've tried to compile each of the "gotchas" I encountered as part of the upgrade process.

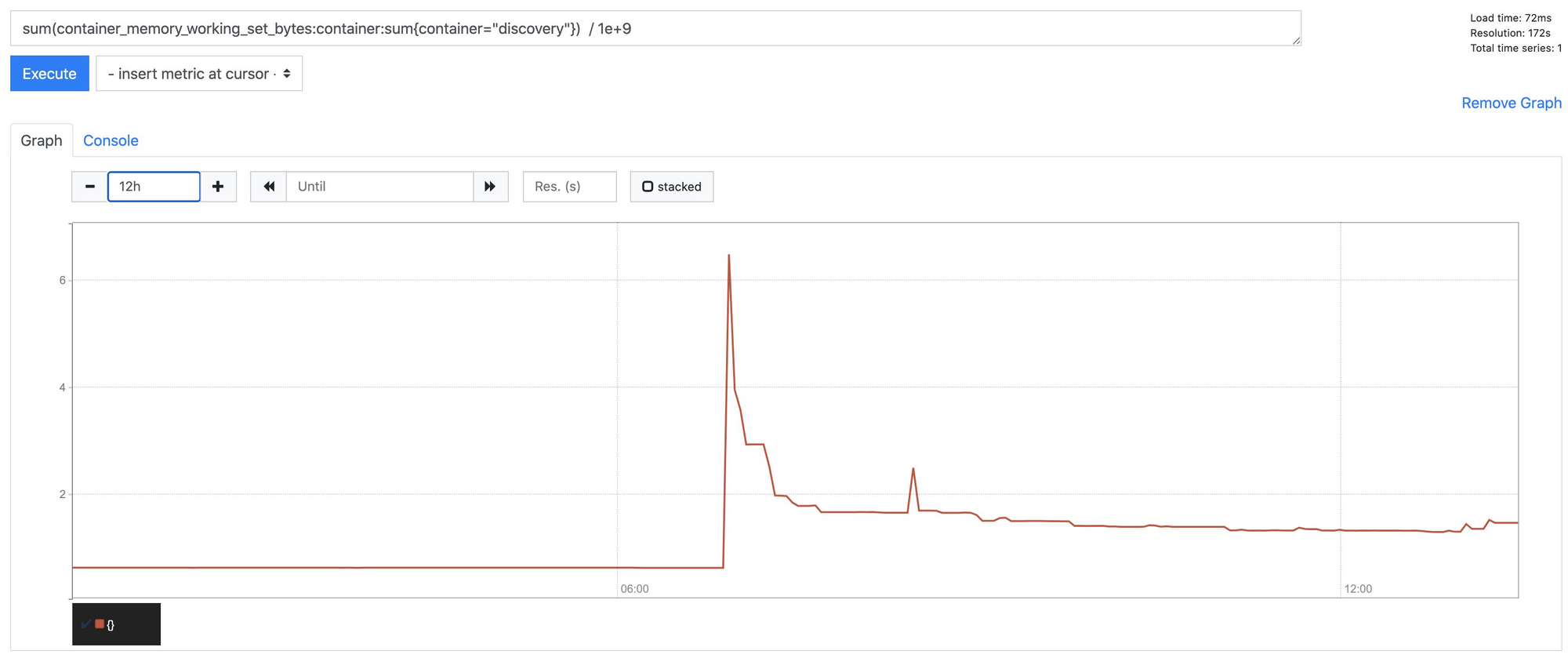

Increased Memory Footprint for istiod

For us, istiod is using about twice as much memory as pilot/discovery did in 1.5, but loosely the same amount of CPU.

This is addressed in 1.7, and as of yesterday the fix has been merged and backported to 1.6, so I anticipate it'll be in the next 1.6 release (1.6.14).

More details of the actual problem can be found here: https://github.com/istio/istio/pull/25532

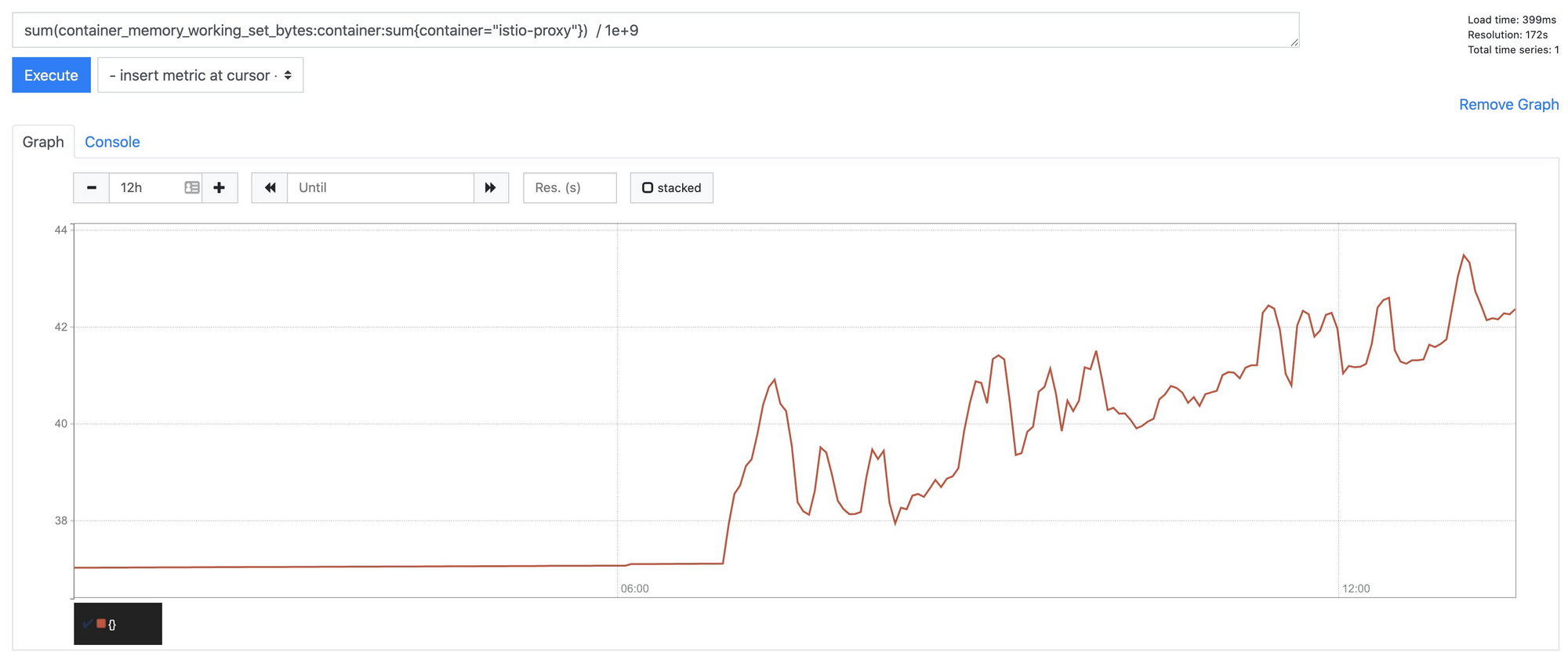

Increased Memory Footprint for istio-proxy

Cluster-wide, we've seen approximately a 15% increase in the memory footprint for istio-proxy, taking it from around 37 GB to 44 GB. Whilst this isn't a complete disaster, it's certainly worth being aware of the additional memory pressure on your nodes.

This appears to be caused by the move to SDS (Secret Discovery Service). Certificates are now requested and managed directly by the proxy and are no longer stored in Kubernetes secrets. Between 1.5 and 1.6 there seems to be approximately a 10 MB increase per proxy.

Which leads us on nicely to...

Secret Discovery Service

This impacted us in two ways. Firstly, Prometheus can no longer mount certificates to make mTLS requests, so all of your application monitoring will start failing once the 1.5 certificate expires (around 45 days from when you switch to SDS). As a result, you now need to run a sidecar on Prometheus which does nothing but procure certificates and write them to disk. I've already blogged about how, so check that out.

The second way SDS impacts you is the number of ConfigMaps and Secrets on your cluster. istiod will create a new ConfigMap called istio-ca-root-cert in every namespace, which for us is about 450 new resources, so just be aware of that.

Also, once all of your sidecars have moved to SDS, you can remove all the old Istio secrets (we had about 600), if you dare. This one-liner generates the delete commands for you (pipe its output to sh to actually run them)*:

    kubectl get secrets --all-namespaces | grep "istio.io/key-and-cert" | awk '{print "kubectl -n "$1" delete secret "$2}'

* Use at your own risk!

Default trustDomain behaviour changed

If you've iterated over previous Istio releases it's likely you've got .Values.global.trustDomain: '' in your configuration, and subsequently in your istio-system/istio ConfigMap.

This worked fine in 1.5, as an empty string was treated as cluster.local. However, in 1.6 the empty string is taken literally, and all of your mTLS requests will start failing unless you explicitly set it to cluster.local first.

More details are in this issue: https://github.com/istio/istio/issues/27828
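As a minimal sketch of the fix, assuming you install from Helm-style values (the `global.trustDomain` key is the one named above; where it lives in your own values file is an assumption), setting the trust domain explicitly before upgrading looks something like this:

```yaml
# Values override: set the trust domain explicitly instead of relying on the
# empty string, which 1.5 treated as cluster.local but 1.6 takes literally.
global:
  trustDomain: cluster.local
```

After rendering, it's worth confirming that the same value has made it into the istio-system/istio ConfigMap.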

Broken mTLS (inbound port redirection)

If you use mTLS and upgrade your control plane in place (so you have a 1.6 control plane with 1.5 data plane), you could hit https://github.com/istio/istio/issues/28026 which means all of your traffic will instantly start failing.

This is addressed by https://github.com/istio/istio/pull/28111, which will be in the next 1.6 release. You can enable the legacy listener support by setting PILOT_ENABLE_LEGACY_INBOUND_LISTENERS=true on istiod. If you don't want to wait for the next release (1.6.14), you can use gcr.io/istio-testing/pilot:1.6-alpha.2259094caeb07b4cf631c99b71ea4a9d3840aa4e for your pilot image.
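For illustration, one way to set that flag is as an environment variable on the istiod Deployment. This is only a sketch of the relevant fragment: the container name `discovery` is an assumption about how your istiod Deployment is laid out, and everything else in the manifest is omitted.

```yaml
# Fragment of the istiod Deployment in istio-system (all other fields omitted).
spec:
  template:
    spec:
      containers:
      - name: discovery  # assumed container name; check your own manifest
        env:
        # Keep generating the legacy inbound listeners for 1.5 proxies.
        - name: PILOT_ENABLE_LEGACY_INBOUND_LISTENERS
          value: "true"
```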

Migrating from MeshPolicy to PeerAuthentication

meshpolicies.authentication.istio.io has been removed in 1.6, and replaced with peerauthentications.security.istio.io. If, like us, you still use meshpolicies to specify cluster wide mTLS, like this:

    apiVersion: authentication.istio.io/v1alpha1
    kind: MeshPolicy
    metadata:
      name: default
      namespace: istio-system
    spec:
      peers:
      - mtls: {}

You'll need to replace it with:

    apiVersion: security.istio.io/v1beta1
    kind: PeerAuthentication
    metadata:
      name: default
      namespace: istio-system
    spec:
      mtls:
        mode: STRICT

However, in our case, whilst upgrading the control plane in place we encountered some race conditions, which meant there was a period between these two resources swapping that globally messed up mTLS. As a result, we decided to keep the original MeshPolicy in place whilst creating the PeerAuthentication policy, as they aren't mutually exclusive. We tidied up the MeshPolicy after we had upgraded everything to 1.6.

CPU Limits have snuck into istio-proxy

Disabling CPU limits on istio-proxy (in fact, on many things) is a very common thing to do, as Docker CFS throttling can be far too aggressive. We achieved this by setting this config on 1.5:

    proxy:
      resources:
        requests:
          cpu: 30m
          memory: 65Mi
        limits:
          memory: 512Mi

However, it seems the templating is wrong in 1.6, and any omission of the CPU limit results in it being defaulted to 2000m. This was highlighted to us when we observed some throttling on istio-proxy on our ingress-nginx services. We've modified the injection template manually to remove it for now.

More details can be found at https://github.com/istio/istio/issues/28465

Config Validation, forcefully enabled

As I've described in this issue, the .Values.global.configValidation: false setting doesn't actually work in 1.6. You might be asking why we have it disabled anyway, which is a good question. Personally, we use istioctl to validate our manifests as part of CI/CD, so the additional validation adds complexity without much gain for us.

In order to effectively disable this feature, you'll need to delete the istiod-istio-system ValidatingWebhookConfiguration from your install manifests and also set VALIDATION_WEBHOOK_CONFIG_NAME='' in the istiod environment, or you'll be spammed with warnings every minute.
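As a rough sketch, the environment variable half of that change could look like the fragment below. As before, the container name `discovery` is an assumption about your istiod Deployment, and the rest of the manifest is omitted.

```yaml
# Fragment of the istiod Deployment in istio-system (all other fields omitted).
spec:
  template:
    spec:
      containers:
      - name: discovery  # assumed container name; check your own manifest
        env:
        # Empty value, as described above, to stop the repeated warnings
        # once the ValidatingWebhookConfiguration has been removed.
        - name: VALIDATION_WEBHOOK_CONFIG_NAME
          value: ""
```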

If you decide to move forward with config validation enabled, you should also be aware of this issue. In summary, istiod tests the webhook by sending an invalid Gateway configuration to Kubernetes. If, like us, you monitor apiserver_admission_webhook_admission_duration_seconds_count you'll see failures, and if you check the istiod logs you'll see:

    {"level":"info","time":"2020-10-28T17:26:10.966351Z","scope":"validationServer","msg":"configuration is invalid: gateway must have at least one server"}

You'll find this as baffling as us if you don't have any Gateways!

EnvoyFilter protobuf parser changed

If you've followed my other post about how to tweak the EnvoyFilter for metrics to reduce the amount of cardinality that istio-proxy generates, then you'll likely hit https://github.com/istio/istio/issues/27851. This'll manifest as a silent failure and simply no metrics at all.

The underlying cause is a change in the parser they use in EnvoyFilter. More details are in the GitHub issue.

PeerAuthentication portLevelMtls behaviour... odd?

I wasn't sure what to call this one, but if, like us, you define ingress on your Sidecar resource like this (one port for the app and another port for Prometheus metrics):

    apiVersion: networking.istio.io/v1beta1
    kind: Sidecar
    metadata:
      labels:
        app: foo
      name: sidecar
    spec:
      ingress:
      - defaultEndpoint: 127.0.0.1:8080
        port:
          name: http-app
          number: 8080
          protocol: HTTP
      - defaultEndpoint: 127.0.0.1:9090
        port:
          name: http-metrics
          number: 9090
          protocol: HTTP
      workloadSelector:
        labels:
          app: foo

And use PeerAuthentication to disable mTLS on the metrics port:

    apiVersion: security.istio.io/v1beta1
    kind: PeerAuthentication
    metadata:
      name: default
    spec:
      selector:
        matchLabels:
          app: foo
      mtls:
        mode: STRICT
      portLevelMtls:
        9090:
          mode: DISABLE

It doesn't work. 9090 still has mTLS and your scripts will start failing. More information can be found in https://github.com/istio/istio/issues/27994, and some examples of the inconsistent interaction between Sidecar and PeerAuthentication can be found in John's comments.

We worked around this by ensuring all deployments specify the traffic.sidecar.istio.io/includeInboundPorts annotation. So, taking the above example, we would use traffic.sidecar.istio.io/includeInboundPorts: "8080". This ensures that when istio-init runs, 9090 is simply ignored and not routed through Envoy. We actually quite like this anyway, as it's extremely declarative.
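To make the workaround concrete, here is a sketch of where the annotation goes for the `foo` example above. The Deployment name, container name and image are hypothetical placeholders; the key point is that the annotation sits on the pod template, not on the Deployment itself.

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: foo  # hypothetical name, matching the app: foo label used above
spec:
  selector:
    matchLabels:
      app: foo
  template:
    metadata:
      labels:
        app: foo
      annotations:
        # Only 8080 is redirected through the sidecar; 9090 bypasses it.
        traffic.sidecar.istio.io/includeInboundPorts: "8080"
    spec:
      containers:
      - name: foo
        image: example/foo:latest  # hypothetical image
        ports:
        - containerPort: 8080  # application traffic
        - containerPort: 9090  # Prometheus metrics
```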

Summary

Total time taken: 3 weeks. Hopefully all of this will help you do it in less.

Whilst there have been a lot of changes between versions 1.4 and 1.6 (istiod, SDS and telemetry v2, amongst others), I do honestly believe they're all for the better, and I am crossing both my fingers (and toes) that there will be some stability in the architecture moving forward, making future releases less Big Bang.

It's also really easy to focus on acute painful windows like this, and easy to forget what life was like before we had the level of observability through metrics and tracing that Istio provides for us, and how much time was sunk into debugging the most basic of problems.