CI for Istio Mesh

How we build, test and release Istio across 5 environments using a custom release process + chart.

In my last blog post I mentioned that I "don't trust operators". This raised a few eyebrows, considering the Istio operator is one of the primary supported means of installing Istio. So I've decided to take a little time out to explain why I said that, and also dig into how we build, test and deploy Istio at Auto Trader.

To add some context to the following sections, it's worth noting that we exclusively use Helm to deploy any Kubernetes IaC. In fact, we've been deploying (and upgrading) Istio using Helm since it was in Alpha over 2 years ago.

Warning: This is much longer than my typical blog posts! So grab a brew.

Operators

Call me old fashioned, but I like to see my infrastructure (in which I include Kubernetes resources) declaratively defined as code, and I like to see that code in Git. Operators at Auto Trader are not used for deploying resources; they're used for runtime concerns such as warming services by modifying existing VirtualServices. I admit the line is a little grey, but hopefully I can explain why we err on the side of limiting their use.

Bringing Failure Forward

Knowing exactly what is going to be applied to my Kubernetes cluster before it is deployed enables us to have processes that detect problems before they're even applied. In our CI pipelines, all Helm charts (including Istio) go through a phase which runs helm template; the output is then passed through KubeVal and IstioCTL Validate to detect schema issues. It is far better to have your pipeline fail at this stage than after a helm install has failed, because at that point you're potentially in a broken state and need to roll back before your next release.
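As a rough sketch of that validation phase (this is not our actual pipeline code), the step could look something like the following. The `validate_chart` helper, the release name argument and the temp-file handling are assumptions for illustration, and the `helm template NAME CHART` form assumes Helm 3. The command runner is injectable so the flow can be exercised without the tools installed.

```ruby
require 'open3'
require 'tempfile'

# Default runner: executes a command and returns [stdout, success?].
SHELL_RUNNER = lambda do |*cmd|
  stdout, status = Open3.capture2(*cmd)
  [stdout, status.success?]
end

# Hypothetical helper: renders a chart and validates the rendered manifests.
# Raises on the first failing step so the pipeline stops before any deploy.
def validate_chart(release, chart_dir, runner: SHELL_RUNNER)
  rendered, ok = runner.call('helm', 'template', release, chart_dir)
  raise "helm template failed for #{chart_dir}" unless ok

  Tempfile.create(['rendered', '.yaml']) do |file|
    file.write(rendered)
    file.flush

    [
      ['kubeval', file.path],                    # Kubernetes schema validation
      ['istioctl', 'validate', '-f', file.path]  # Istio resource validation
    ].each do |cmd|
      _, ok = runner.call(*cmd)
      raise "#{cmd.first} reported problems in #{chart_dir}" unless ok
    end
  end
  true
end
```

Calling `validate_chart('istio', './charts/istio')` in a pipeline step would then fail the build on the first tool that exits non-zero.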

We also have around 25 custom Validators which check for a whole variety of problems that have caught us out in the past:

- check_istio_inbound_ports_annotation... Pass

- check_deployment_hooks... Pass

- check_istio_serviceentry_port_protocol... Pass

- check_schema... Pass

- check_cronjob_periods... Pass

- check_curl_in_probes... Pass

- check_manifests... Pass

- check_namespace... Pass

- check_release_name... Pass

- check_duplicate_resources... Pass

- check_jvm_max_heap_config... Pass

- check_reserved_custom_service_names... Pass

- check_same_release_and_namespace... Pass

- check_reserved_ports... Pass

- check_image_name... Pass

- check_duplicate_environment_variables... Pass

- check_empty_config_section_in_values... Pass

- check_service_discovery... Pass

- check_network_policies... Pass

- check_helmfile_secrets... Pass

- check_istio_manifests... Pass

- check_resource_memory_limits_and_requests... Pass

- check_yaml_validity... Pass

- check_manifest_api_versions... Pass

To put this into perspective, we have around 400 services and deploy into production environments 300-400 times per day. We keep deployment data for 90 days, and haven't had a deployment failure due to manifest issues in that time.
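To give a flavour of what one of these custom validators might look like, here is a minimal sketch of a duplicate-resource check. It is not our actual `check_duplicate_resources` implementation; the function name and the shape of the return value are assumptions.

```ruby
require 'yaml'

# Hypothetical validator: returns the identifiers of any resource that
# appears more than once in a rendered multi-document manifest.
def duplicate_resources(rendered_yaml)
  docs = YAML.load_stream(rendered_yaml).grep(Hash) # ignore empty documents
  keys = docs.map do |doc|
    meta = doc['metadata'] || {}
    [doc['kind'], meta['namespace'] || '(cluster)', meta['name']].join('/')
  end
  keys.tally.select { |_key, count| count > 1 }.keys
end
```

A validator like this passes when the returned list is empty, and otherwise prints the offending identifiers and fails the pipeline.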

Eyes-on differential

This is kind of an extension to the above, but a huge part of our Istio upgrade process is generating the manifests from our current version and from the new version, and simply looking at the differences. I've lost track of the number of problems we've found with this method that would have bitten us at deployment time.

- https://github.com/istio/istio/issues/30756 - Ingress gateways being deployed even when we'd disabled them

- https://github.com/istio/istio/issues/27868 - Validating Webhooks still get installed despite us disabling them

- https://github.com/istio/istio/issues/19224 - Templated manifests contained `creationTimestamp` which is invalid for new resources

- https://github.com/istio/istio/issues/19227 - The port on `zipkin` was wrong

- https://github.com/istio/istio/issues/19226 - The istio `ClusterRole` contained more permissions than it needed to

There's more, but I won't bore you. I cannot stress enough how important it is to have eyes-on your Infrastructure as Code.
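A line-by-line diff in Git is what we actually read, but a resource-level summary can help point your eyes at the right places first. The following is a minimal sketch under that assumption; `index_manifest` and `manifest_changes` are hypothetical names, not part of our tooling.

```ruby
require 'yaml'

# Index a multi-document manifest by "Kind/namespace/name".
def index_manifest(yaml_text)
  YAML.load_stream(yaml_text).grep(Hash).to_h do |doc|
    meta = doc['metadata'] || {}
    ["#{doc['kind']}/#{meta['namespace'] || '-'}/#{meta['name']}", doc]
  end
end

# Hypothetical helper: summarise which resources were added, removed or
# changed between the manifests generated for two Istio versions.
def manifest_changes(current_yaml, target_yaml)
  current = index_manifest(current_yaml)
  target = index_manifest(target_yaml)
  {
    added: (target.keys - current.keys).sort,
    removed: (current.keys - target.keys).sort,
    changed: (current.keys & target.keys).reject { |key| current[key] == target[key] }.sort
  }
end
```

An unexpected entry under `added` is exactly the kind of thing that would flag a gateway or webhook appearing despite being disabled.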

Security

Nothing on our cluster has permission to modify cluster scoped resources at runtime. So we're talking ClusterRole, ClusterRoleBinding and so on. All changes that could modify such resources need to be in CI so we can understand what changed, and why, and when.

Istio Charts

So as I mentioned before, we deploy Istio using Helm, and we've been doing so since the early Alpha days. Old time Istio users at this point will be asking "but they stopped supporting helm", and that's true.

We never actually used the istio Helm charts to deploy Istio

Our approach has always been, and still is:

- Template all Istio manifests, historically using `helm template .` but more recently using `istioctl manifest generate`

- Post-process the output through some custom mutation scripts to add standard labels, and to mutate in ways the Istio configuration options do not allow us to

- Split those manifests into two charts: `istio`, which contains the main Istio resources as well as any `CustomResourceDefinitions`, and `istio-crd`, which contains the implementations of the previous `CustomResourceDefinitions`

This is all just one big Ruby script that we run in our Git repo. There are some Auto Trader-isms in it, so I'll try and logically break it down.

Download and Template

First of all you need your istiooperator.yaml. We're not actually going to deploy the operator, but we're going to use the same configuration to invoke istioctl manifest generate.

Then, obviously, you need to download your target version of Istio and run the template command:

    require 'fileutils'

    release = 'https://github.com/istio/istio/releases/download/1.9.1/istio-1.9.1-osx.tar.gz'
    # Capture the version (e.g. "1.9.1") from the release URL
    version = release.match(/.*\/istio-(.*)-.*/)[1]

    # Download and extract the release into src/<version>, unless it's already there
    def download_release(release, version)
      path = "src/#{version}"
      unless File.exist?(path)
        FileUtils.mkdir_p path
        file_name = release.split("/").last
        # Reuse a previously downloaded tarball if we have one
        unless File.exist?("/tmp/#{file_name}")
          puts "Downloading:"
          puts " - location #{release}"
          `wget --quiet #{release} -O /tmp/#{file_name}`
          puts ""
        end
        puts "Extracting:"
        puts " - file /tmp/#{file_name}"
        `tar xf /tmp/#{file_name} -C src/#{version} --strip-components 1`
        puts ""
      end
    end

    # Download the version of istio we want
    download_release(release, version)

    # Template it
    system("src/#{version}/bin/istioctl manifest generate -f istiooperator.yaml > src/#{version}/generated.yaml") or raise 'Failed to generate manifests!'

At this point, you'll have src/1.9.1/generated.yaml, which is one massive YAML file of all the resources required to deploy Istio. So we want to read that file, and pipe each resource into either `istio` or `istio-crd`. I can't share my code here as it's simply too Auto Trader but in principle:

- Load `src/1.9.1/generated.yaml`

- Split by `---`

- `Psych.load` each manifest

- Mutate it any way you want (we add some labels, fix a few things like mutating the injector-config in ways the istiooperator doesn't allow)

- Write it to either `charts/istio/templates` or `charts/istio-crd/templates` depending on whether it's a `CustomResourceDefinition` implementation or not.
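The steps above can be sketched roughly as follows. This is not our real script: the label in `STANDARD_LABELS` is a made-up placeholder, `split_manifests` is a hypothetical name, and I'm assuming a resource counts as a CRD "implementation" when its kind is declared by one of the `CustomResourceDefinition` resources in the same file. Writing the files out to the chart directories is left out.

```ruby
require 'yaml'

# Hypothetical label added to every resource; substitute your own standards.
STANDARD_LABELS = { 'example.com/generated-by' => 'generate-manifests' }.freeze

# Splits a generated manifest between the two charts. CRDs and ordinary
# resources go to `istio`; resources whose kind is defined by one of those
# CRDs (the "implementations") go to `istio-crd`.
def split_manifests(generated_yaml)
  docs = YAML.load_stream(generated_yaml).grep(Hash) # ignore empty documents

  # Kinds declared by the CRDs in this manifest, e.g. "EnvoyFilter".
  crd_kinds = docs
    .select { |doc| doc['kind'] == 'CustomResourceDefinition' }
    .map { |doc| doc.dig('spec', 'names', 'kind') }

  docs.each_with_object({ 'istio' => [], 'istio-crd' => [] }) do |doc, charts|
    # Mutation step: add standard labels without overwriting existing ones.
    doc['metadata'] ||= {}
    doc['metadata']['labels'] = STANDARD_LABELS.merge(doc['metadata']['labels'] || {})

    chart = crd_kinds.include?(doc['kind']) ? 'istio-crd' : 'istio'
    charts[chart] << doc
  end
end
```

Each document in the returned hash can then be serialised with `to_yaml` into the matching chart's `templates` directory.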

Once you've got this script down, moving between Istio releases is simply a case of updating your target version and running your ./generate-manifests.rb script to template your two Istio charts.

The two charts are important because your CustomResourceDefinition resources need to be applied to the cluster before the implementations of the CRDs, otherwise Helm will fail. I'll cover how we manage that a little later.

Eyes-On & Validation Time

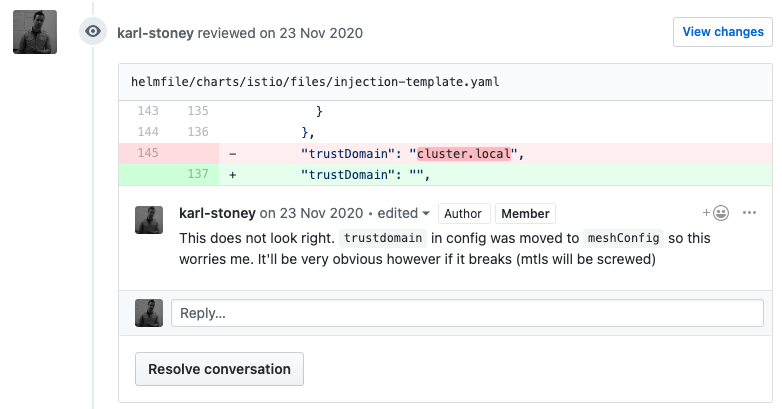

As mentioned previously, at this point it's eyes on. Look at everything that's changed in your manifests, question things that don't look right:

Once you're happy with all the changes, get it merged into master and trust the aforementioned pipeline (KubeVal, IstioCTL validate and the custom validators) to detect any other issues you may have missed.

Deploy Time

We currently don't use canary releases. We've been deploying Istio since before canary upgrades were even a thing. Whilst I'm not fundamentally against canary releases of Istio, they add a fair bit of complexity to your deployment. We've personally been able to detect issues with control plane -> data plane version differences way before production environments so haven't had motivation to change it. And this is how we do it:

Control Plane

To deploy the Istio control plane, we utilise Helmfile. This allows us to define a relationship between the istio and istio-crd charts. As you can see below, we state that istio-crd needs istio, which means istio will always deploy first, and istio-crd will deploy upon completion:

    helmDefaults:
      timeout: 900

    releases:
      - name: istio
        namespace: istio-system
        chart: ./charts/istio
        values:
          - environments/values.yaml

      - name: istio-crd
        namespace: istio-system
        chart: ./charts/istio-crd
        values:
          - environments/values.yaml
        needs:
          - istio-system/istio

Istio state that the control plane is compatible with the data plane at T-1, so a 1.9 control plane will work with a 1.8 data plane. Typically, this is true, but we have been bitten a few times so keep your eyes out.

Data Plane

Once your control plane has been upgraded, it's now time to update your data plane. This involves performing a rolling restart of all workloads that are using the previous version of the Sidecar.

We've written some tooling to automate this process. I can't share it as it's a TypeScript app but logically it:

- Gets the `istio-system/istio-sidecar-injector` ConfigMap, and extracts the `values.global.proxy.image`

- Iterates over each `Namespace` in your cluster, and gets the `Deployments`, `StatefulSets` etc from the Namespace

- Checks if they have the `sidecar.istio.io/inject: true` annotation

- If they do, gets the `Pods` for the workload via the `matchLabels` of the workload

- Checks the `istio-proxy` container `image:tag` against the previously looked up `values.global.proxy.image` and if they don't match, triggers a rollout of the Workload
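The real tool is a TypeScript app, but the core decision can be sketched in a few lines of Ruby. This is an illustration only: `pods_for` and `stale_workloads` are hypothetical names, the inputs are assumed to be plain hashes parsed from the Kubernetes API (for example via `kubectl get ... -o json`), and the injection annotation is assumed to be `sidecar.istio.io/inject` on the pod template.

```ruby
# Pods in the same namespace whose labels satisfy the workload's matchLabels.
def pods_for(workload, pods)
  selector = workload.dig('spec', 'selector', 'matchLabels') || {}
  return [] if selector.empty?

  pods.select do |pod|
    labels = pod.dig('metadata', 'labels') || {}
    pod.dig('metadata', 'namespace') == workload.dig('metadata', 'namespace') &&
      selector.all? { |key, value| labels[key] == value }
  end
end

# Hypothetical helper: workloads that opt in to injection and have at least
# one pod whose istio-proxy image differs from the expected one.
def stale_workloads(expected_image, workloads, pods)
  workloads.select do |workload|
    annotations = workload.dig('spec', 'template', 'metadata', 'annotations') || {}
    next false unless annotations['sidecar.istio.io/inject'] == 'true'

    pods_for(workload, pods).any? do |pod|
      proxy = (pod.dig('spec', 'containers') || []).find { |c| c['name'] == 'istio-proxy' }
      proxy && proxy['image'] != expected_image
    end
  end
end
```

Everything returned by `stale_workloads` is a candidate for a rolling restart.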

We personally have a parallelism level of 3 workloads at a time. As we have around 400 workloads this takes around 3 hours to fully complete. The process stops if any application fails to roll out.
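As a simplified sketch of that behaviour (our tool differs in the details), the following restarts workloads in fixed batches and stops at the first batch containing a failure. `rolling_restart` is a hypothetical name, and the block is assumed to wrap whatever actually restarts a workload and waits for it, returning true on success.

```ruby
# Hypothetical sketch: restart workloads `parallelism` at a time and stop
# as soon as any workload in a batch fails to roll out.
def rolling_restart(workloads, parallelism: 3, &restart)
  completed = []
  workloads.each_slice(parallelism) do |batch|
    # One thread per workload in the batch; Thread#value waits for the result.
    results = batch.map { |w| Thread.new { [w, restart.call(w)] } }.map(&:value)

    failed = results.reject { |_w, ok| ok }.map(&:first)
    completed.concat(results.select { |_w, ok| ok }.map(&:first))
    raise "Rollout failed for: #{failed.join(', ')}" unless failed.empty?
  end
  completed
end
```

With around 400 workloads and batches of 3 that is roughly 134 batches, so a 3 hour run works out at about 80 seconds per batch.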

Environments

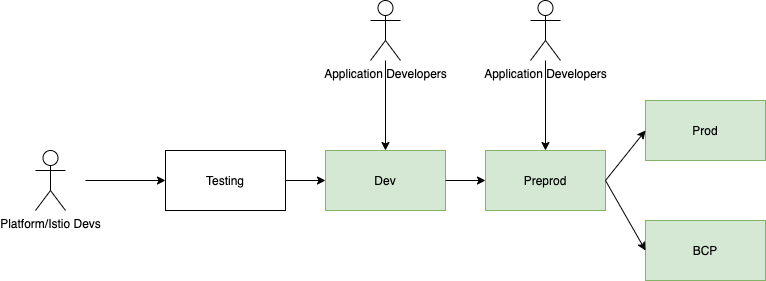

We have three qualifying environments before production and the above process is repeated in each of them.

Istio will first go into the Testing environment. Following that, we run a large set of tests which cover all the permutations of deployments our platform allows, for example:

- Deployments with one container

- Deployments with Multiple containers

- Deployments with Cronjobs

- Deployments in Permissive mode

- Statefulset versions of all of the above

- All different varieties of `VirtualService` and `DestinationRule` configuration

As a general rule the new version will remain in each environment as follows:

- `testing` - until we're confident that there are no obvious issues following the upgrade. This could be a fairly short amount of time, but often we need to iterate on the releases as we find problems and await fixes from the upstream Istio project

- `dev` - 1 day

- `preprod` - 1 week

Finding issues in Testing

As you'll have noted from the above diagram, testing is our opportunity to detect problems before we start impacting developers. We detect 90% of our problems with Istio at the testing phase. Whilst our test suite is quite thorough, we find most failures in Istio to be novel. As a result, we run two different tools in testing to generate a variety of load:

- https://github.com/Stono/testyomesh - Generates loads of different request and response profiles across a set of 3 microservices, while constantly causing deployments. Great for detecting issues where you have different sidecar versions, for example https://github.com/istio/istio/issues/30437, which exposed 503 errors when you have a 1.7 `Sidecar` and a 1.8 data plane.

- istio-test-app - this is a tool I haven't OSS'd because it's quite Auto Trader specific. It's similar to `testyomesh`, but contains applications which are more typical of Auto Trader deployments, for example CronJobs.

We have eyes on:

- Istio metrics, eg `istio_requests_total{response_code="503"}`; novel failures will almost always manifest here first

- CPU and RAM, for example in https://github.com/istio/istio/issues/28652 we noticed a 20% increase in memory that was not in the release notes.

- Prometheus cardinality; in several releases we've seen Prometheus cardinality jump up. Keep an eye on `count({__name__=~"istio.*"}) by (__name__)` amongst others

- Push times, convergence times, pilot errors and so on. Use the provided Istio dashboards, or make your own.

If we're satisfied with the "roll forward" of the dataplane, we'll then roll it back. Just to make sure we can. And we'll have eyes on the same data & alerts.

Finding issues in Dev and Beyond

If an Istio release gets past testing then we're almost certain it will make it to production. However, there is always the risk of novel failures that we were missing tests for that would manifest once Istio is mixed with the variety of applications that Auto Trader deploy.

Dev contains about 25% of our core and most critical applications. We leave Istio in here for a day or two to detect any incompatibilities with the deployed applications. Any problems, we roll back and recreate the issue with a test in our testing environment.

From there we move into Preprod, which is a 100% like-for-like copy of our Production environment. Typically we'll leave Istio here for around a week, as we've been bitten by some slow burning issues in the past like mTLS certificate renewal.

At this point, we're confident it'll be OK to hit production, so that's where it goes. In the unexpected event we have an issue in production, we have bcp as a cold standby running the previous version of Istio that we can route traffic to. I honestly cannot remember the last time we had a service impacting issue in Production as a result of an Istio release.

Summary

Hopefully this gives you some insight as to how we manage Istio releases at Auto Trader. Sure, it isn't as simple as deploying the operator and a single YAML file, but we've got an excellent track record of production releases of Istio since the alpha days without service impact, so I firmly believe investing in your CI pipeline for Istio will pay dividends.

I also know that it sounds like a lot of work - but the vast majority of it is fully automated. Realistically Auto Trader spend around 2-3 days per quarter upgrading Istio if it goes to plan, 5-10 days if it doesn't.