Upgrading to Istio 1.21.x

Upgrading Istio from 1.20 to 1.21. Issues with JWT auth in dynamicMetadata and AuthorizationPolicies.

We've had a good run, Istio - 1.18 and 1.19 were a breeze compared to previous releases, and 1.20 didn't even warrant a blog post! Unfortunately I was bitten during 1.21 (partly my own doing), so I'm back once again for another episode of Upgrading Istio!

Remember this is just what bit me. My setup will be different to yours, so don't forget to read the change notes and upgrade notes.

JWT Dynamic Metadata

As soon as we rolled Istio 1.21 out into our testing environment, all our tests around JWT-based authentication began to fail.

Now I admit we're doing something a little left field here: we have an EnvoyFilter that extracts the incoming JWT claims, which envoy.filters.http.jwt_authn has historically stored in the dynamic metadata. Suddenly our code, which looked like this:

    local meta = request_handle:streamInfo():dynamicMetadata():get("envoy.filters.http.jwt_authn")
    local claims = meta["our-issuer"]

began to return nil for our-issuer. The change was subtle and undocumented. In the dynamic metadata, the jwt_authn filter no longer stores the claims under a key named after the issuer, and instead just uses payload, so the "fix" is:

    local meta = request_handle:streamInfo():dynamicMetadata():get("envoy.filters.http.jwt_authn")
    local claimsOld = meta["our-issuer"]
    local claimsNew = meta["payload"]
    local claims = claimsNew or claimsOld

Note that we had to look for both here, to be able to transparently roll out the new Istio version. The other option you could take is to have separate EnvoyFilters that specifically target 1.20 and 1.21 sidecars, but that was more effort for us.
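If you'd rather not sprinkle that fallback around the filter, it can live in one small helper. This is a minimal sketch rather than our exact filter: `jwt_claims` and the `x-jwt-sub` header are names made up for illustration, and the nil check is there because I'm assuming the metadata is simply absent when a request carries no JWT.

```lua
-- Namespace the jwt_authn filter writes its dynamic metadata under.
local JWT_FILTER = "envoy.filters.http.jwt_authn"

-- Hypothetical helper: returns the claims table, or nil if none were stored.
local function jwt_claims(request_handle, issuer)
  local meta = request_handle:streamInfo():dynamicMetadata():get(JWT_FILTER)
  if meta == nil then
    return nil -- assumed: no JWT on this request, or jwt_authn did not run
  end
  -- 1.21 sidecars use "payload"; older sidecars key the claims by issuer name.
  return meta["payload"] or meta[issuer]
end

function envoy_on_request(request_handle)
  local claims = jwt_claims(request_handle, "our-issuer")
  if claims == nil or claims["sub"] == nil then
    return
  end
  -- Illustrative use only: pass the subject upstream (header name is an assumption).
  request_handle:headers():replace("x-jwt-sub", tostring(claims["sub"]))
end
```

Once every sidecar is on 1.21, the `or meta[issuer]` branch can be deleted in one place.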

I reported it here and the response was:

We can update the notes to mention the internal change to xDS, but we usually reserve the right to do small changes like this.

Whilst true, it kinda sucks - and personally doesn't feel like a small change. However I suppose if you play with fire, you get burned. Moral of the story here is if you're going to use EnvoyFilters at all, make sure you have a good robust test suite around your implementation. Best bet is to avoid them.
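On the test suite point: the Lua itself can be exercised outside Envoy by handing it a fake request handle. A rough sketch, assuming a plain Lua interpreter is available in CI; `fake_handle` and `jwt_claims` are hypothetical names, and the stub only implements the three calls the lookup makes, so it says nothing about how a real proxy behaves.

```lua
-- Minimal stand-in for Envoy's request handle: only the calls the lookup uses.
local function fake_handle(jwt_metadata)
  local dynamic_metadata = {}
  function dynamic_metadata:get(filter_name)
    if filter_name == "envoy.filters.http.jwt_authn" then
      return jwt_metadata
    end
    return nil
  end

  local stream_info = {}
  function stream_info:dynamicMetadata()
    return dynamic_metadata
  end

  local handle = {}
  function handle:streamInfo()
    return stream_info
  end
  return handle
end

-- The lookup under test, with the old/new fallback and a nil guard.
local function jwt_claims(request_handle, issuer)
  local meta = request_handle:streamInfo():dynamicMetadata():get("envoy.filters.http.jwt_authn")
  if meta == nil then
    return nil
  end
  return meta["payload"] or meta[issuer]
end

-- Old layout (keyed by issuer), new layout (keyed by "payload"), and no JWT at all.
local old_layout = fake_handle({ ["our-issuer"] = { sub = "alice" } })
local new_layout = fake_handle({ payload = { sub = "alice" } })
local no_jwt = fake_handle(nil)

assert(jwt_claims(old_layout, "our-issuer").sub == "alice")
assert(jwt_claims(new_layout, "our-issuer").sub == "alice")
assert(jwt_claims(no_jwt, "our-issuer") == nil)
```

This only catches logic mistakes. The stub encodes whatever layout you believe Envoy uses, so it would not have caught the 1.21 change on its own; it complements tests against a real sidecar rather than replacing them.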

I'd love to see a little bit more documentation around the things you can access from an EnvoyFilter, such as DynamicMetadata. I don't think some documentation of these APIs is at odds with Istio reserving the right to change it without notice.

Matches on AuthorizationPolicy requestPrincipal changed

This one was actually reported by another Istio user on the same thread. They found that during the 1.21 upgrade their AuthorizationPolicy stopped matching on their requestPrincipals string, which was a concatenation of their iss and sub, e.g.:

    apiVersion: security.istio.io/v1beta1
    kind: AuthorizationPolicy
    metadata:
      name: allow-ingress-infra-w-token
    spec:
      action: ALLOW
      rules:
      - from:
        - source:
            namespaces:
            - istio-system
            principals:
            - cluster.local/ns/istio-system/sa/foo-ingressgateway-service-account
            requestPrincipals:
            # i RequestAuthentication URL (<ISS>/<SUB>)
            - 'https://token.actions.githubusercontent.com/repo:ORGNAME/REPONAME:ref:refs/heads/main'

To "fix" it, they needed to match explicitly on the iss and sub:

    apiVersion: security.istio.io/v1beta1
    kind: AuthorizationPolicy
    metadata:
      name: allow-ingress-infra-w-token
    spec:
      action: ALLOW
      rules:
      - from:
        - source:
            namespaces:
            - istio-system
            principals:
            - cluster.local/ns/istio-system/sa/FOO-ingressgateway-service-account
            requestPrincipals: ['*']
        when:
        - key: request.auth.claims[iss]
          values: [https://token.actions.githubusercontent.com]
        - key: request.auth.claims[sub]
          values:
          - repo:ORG/REPO:ref:refs/heads/main

Performance

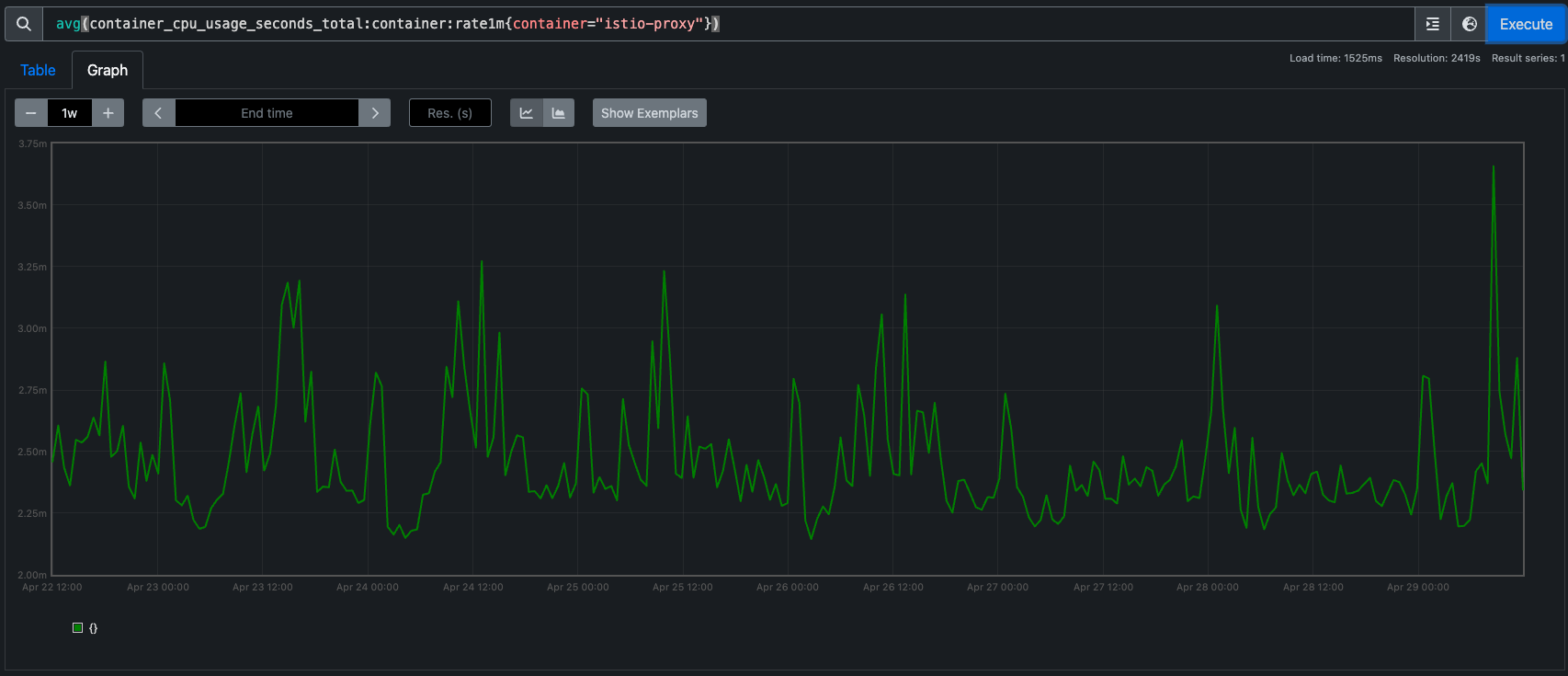

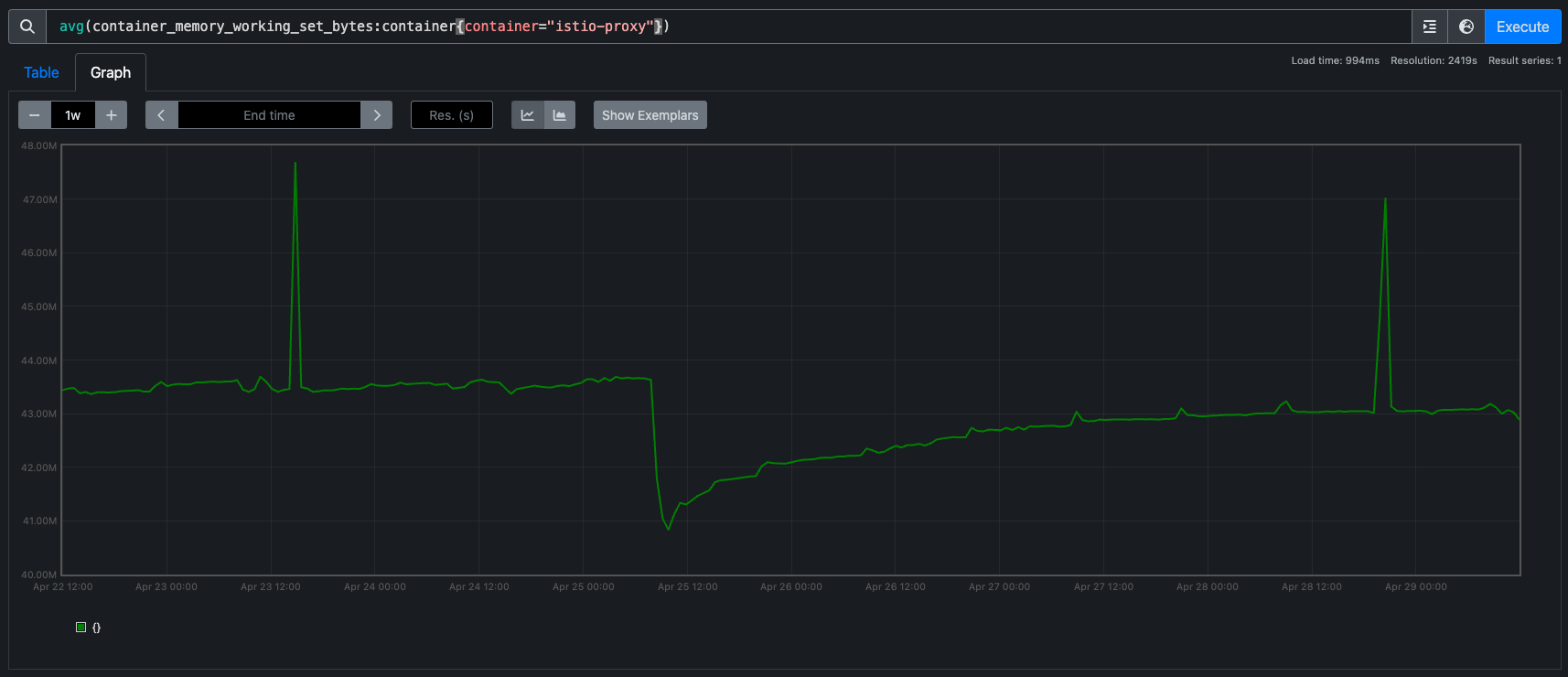

I'm going to start popping in some performance metrics as we've seen regressions in the past. This release we haven't observed any measurable differences in CPU or Memory consumed by the sidecars:

And istiod has broadly remained the same too. Latencies across all our applications are unchanged (we use a lot of L7 features too).

Conclusion

Not wildly painful, however we still haven't promoted this to production. For context, we have multiple non-production environments (testing, dev and preprod), and each one grows in scale and in the complexity of the services deployed.

We've been in preprod for a week now. We'll probably leave it a month to see if anything else crops up, and I will update here if it does.